Create an AI Robot NPC using Hugging Face Transformers 🤗 and Unity Sentis

An NPC that understands your text orders

Running powerful AI models directly inside the game is the new frontier in game development.

Think how immersive it will be to:

Have real-time conversations with NPCs (non-player characters) using chat models and AI voices.

Be able to talk with them directly with your voice.

Control your character through text or voice.

Although this was already possible thanks to APIs, there are two drawbacks:

Dependence on an internet connection, risking immersion disruption due to potential API lag.

Potential high cost associated with API usage, especially with many players.

Fortunately, Unity launched Sentis (formerly Barracuda), a neural network inference library that lets you run AI models directly inside your game without relying on APIs.

And so today, we’re going to build this smart robot that can understand player orders and perform them.

This is what you’ll get at the end of this tutorial:

We will use an AI model that understands any text input and finds the most appropriate action on its list.

Contrary to classical game development, what’s interesting about this system is that you don’t need to hard-code every interaction. Instead, you use a language model that selects which of the robot’s possible actions is the most appropriate given the user input.

You can download the Windows demo 👉 here

To make this project, we’re going to use:

Unity Game Engine (2022.3 or later).

The Jammo Robot asset made by Mix and Jam.

Unity Sentis, the neural network inference library that allows us to run our AI model directly inside our game.

The Hugging Face Sharp Transformers: a Unity plugin of utilities to run Transformer 🤗 models in Unity games.

You can find the complete Unity Project 👉 here

At the end of the project, you’ll build your intelligent robot game demo. And then, you’ll be able to iterate with other ideas.

For instance, after making this game, I created this Dungeon Escape demo ⚔️ with the same codebase, where your goal is to flee from this jail by stealing the 🔑 and the gold without getting noticed by the guard.

So, in the first part of this tutorial, we’ll understand the Sentence Similarity task and how it works. Then, we’ll learn how to build this demo.

Sounds fun? Let’s get started!

The power of Sentence Similarity 🤖

Before diving into the implementation, we must understand sentence similarity and how it works.

How does this game work?

In this game, we want to give more freedom to the player. Instead of giving an order to the robot by just clicking a button, we want them to interact with it through text.

The robot has a list of actions and uses a sentence similarity model that selects the closest action (if any) given the player’s order.

For instance, if I write “Hey, grab me the red box”, the robot isn’t programmed to know what “Hey, grab me the red box” means. But the sentence similarity model connects this order to the “bring me red cube” action.

Therefore, thanks to this technique, we can build believable character AI without the tedious process of mapping every possible player input to a robot response by hand. We let the sentence similarity model do the job.

What is Sentence Similarity?

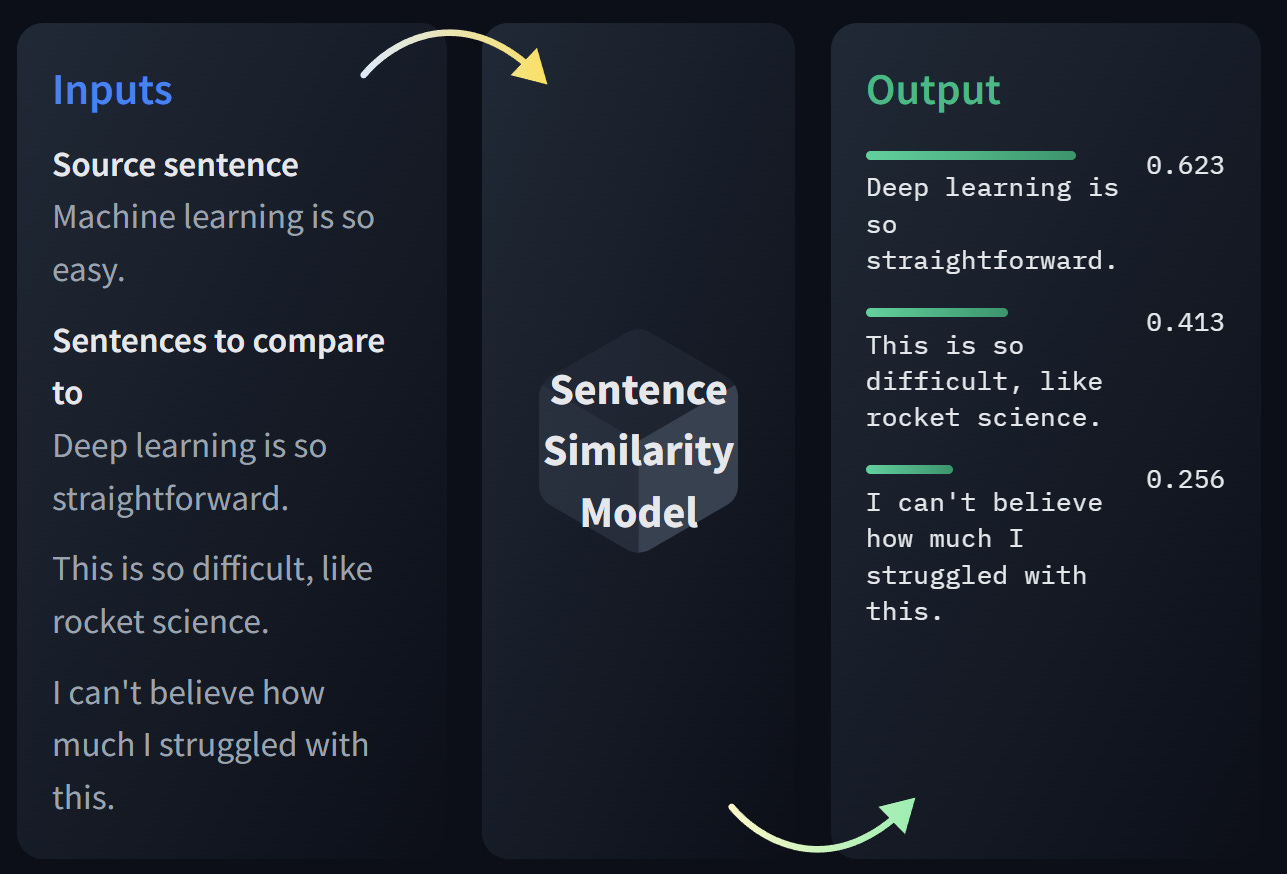

Sentence Similarity is the task of calculating, given a source sentence and a set of other sentences, how similar each of those sentences is to the source sentence.

For instance, if our source sentence (player order) is “Hey grab me the red box”, it’s very close to the “Bring red box” sentence.

Sentence similarity models convert input text, such as “Hello”, into vectors (embeddings) that capture semantic information. This step is called embedding. Then, we calculate how close (similar) two embeddings are using cosine similarity.

I won’t go into the details, but since our sentence similarity model produces vectors, we can calculate the cosine of the angle between two vectors. The closer the result is to 1, the more similar these two vectors are.
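To make the idea concrete, here is a minimal sketch of cosine similarity in plain Python (not the project's C# code). The three-dimensional vectors are made up for illustration; real sentence embeddings have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors, invented for this example only.
order = [1.0, 2.0, 0.0]
same_direction = [2.0, 4.0, 0.0]  # points the same way as `order`
orthogonal = [0.0, 0.0, 3.0]      # shares no direction with `order`

close_score = cosine_similarity(order, same_direction)  # very close to 1
far_score = cosine_similarity(order, orthogonal)        # exactly 0
```

Note that the length of a vector does not matter here, only its direction, which is why `same_direction` scores as (almost exactly) identical to `order`.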

If you want to dive deeper into the sentence similarity task, check this 👉 https://huggingface.co/tasks/sentence-similarity

The Complete process

Now that we understand Sentence Similarity, let's see the entire process, from the player typing an order to the robot acting.

The player types an order: "Can you bring me the red cube?"

The robot has a list of actions ["Hello", "Happy", "Bring the red cube", "Move to the red cube"]

What we want to do then is embed the player input text and compare it to the robot action list to find the most similar action (if any).

To do that, we first tokenize the input: a Transformer model can't take a string as input, so the text needs to be translated into numbers. This is done using the Tokenizer code provided by Sharp Transformers.

Then, the input (tokenized) is passed to the model that outputs an embedding of this text, a vector that captures semantic information about the text. This inference part is done by Unity Sentis.

Now we can compare this vector with other vectors (from the action list) using cosine similarity.

We select the action with the highest similarity and get the similarity score.

If the similarity score is greater than 0.2, we ask the robot to perform the action.

Otherwise, we ask the robot to perform its "I'm perplexed" animation, since the order given is too different from the action list. This can happen if the player writes something totally irrelevant, like "do you like rabbits?".

The AI Model used

For this demo, we're using all-MiniLM-L6-v2.

It's a BERT Transformer model. It's already trained, so we can use it directly.

I already provided you with an ONNX file, so you don't need to convert it.

Let's build our smart robot demo 🤖

Step 0: Get the project

You can find the 👉 complete Unity project here

Step 1: Install Unity Sentis

The Sentis Documentation 👉 https://docs.unity3d.com/Packages/com.unity.sentis@latest

Open the Jammo project

Click Sentis Pre-Release package or go to Window > Package Manager, click the + icon, select "Add package by name…" and type "com.unity.sentis"

Press the Add button to install the package.

Step 2: Install Sharp Transformers 💪

Sharp Transformers is a Unity plugin of utilities to run Transformer 🤗 models in Unity games.

We need it for the tokenization step.

Go to "Window" > "Package Manager" to open the Package Manager.

Click the "+" in the upper left-hand corner and select "Add package from git URL".

Enter the URL of this repository and click "Add": https://github.com/huggingface/sharp-transformers.git

Step 3: Build the inference process 🧠

As described in Sentis documentation, to use Sentis to run a neural network in Unity, we need to follow these steps.

Use the Unity.Sentis namespace.

Load a neural network model file.

Create input for the model.

Create an inference engine (a worker).

Run the model with the input to infer a result.

Get the result.

In our case, we do all of this in the SentenceSimilarity.cs file, which will be attached to our robot.

In Awake, we:

Load our neural network

Create an inference engine (a worker).

Create an operator that will allow us to perform operations on tensors.

We have three functions:

Encode: takes our player input (text), tokenizes it, and embeds it.

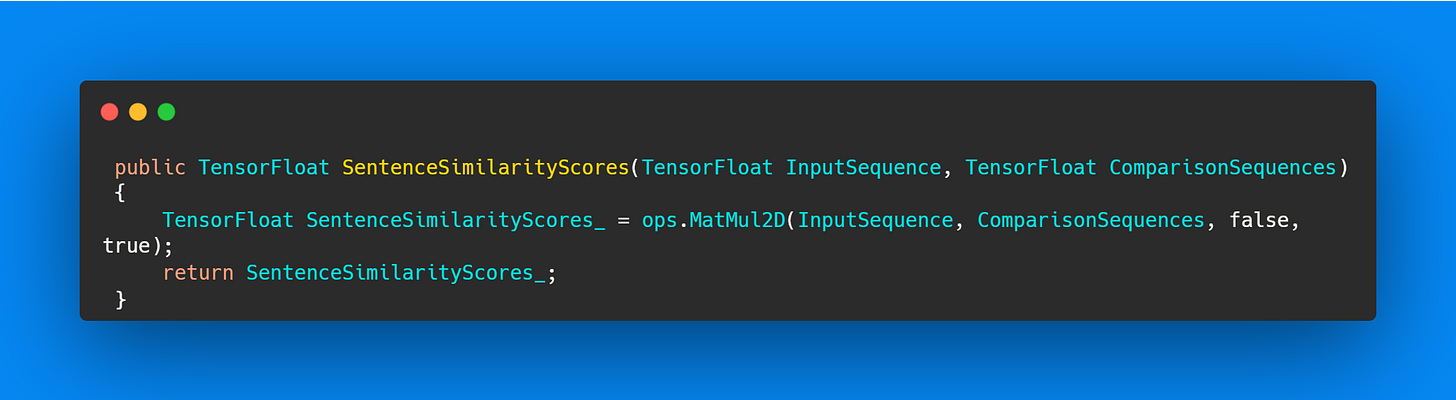

SentenceSimilarityScores: calculates the similarity scores between the input embedding (what the user typed) and the comparison embeddings (the robot action list).

RankSimilarityScores: gets the most similar action and its index given the player input.

Thanks to these three functions, we are able to perform sentence similarity. Now we just need to define our robot behavior based on the actions.
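As a rough illustration of what the scoring and ranking steps compute, here is a plain-Python sketch (not the project's C# code, and not the Sentis API). It skips Encode entirely, since that step needs the tokenizer and the model, and works on small made-up embeddings instead. The function names mirror the ones above, but the signatures are my own assumptions.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def sentence_similarity_scores(input_embedding, comparison_embeddings):
    """Return one cosine similarity score per comparison embedding."""
    query = normalize(input_embedding)
    scores = []
    for candidate in comparison_embeddings:
        unit_candidate = normalize(candidate)
        scores.append(sum(q * c for q, c in zip(query, unit_candidate)))
    return scores


def rank_similarity_scores(scores):
    """Return (best_score, best_index) for a non-empty list of scores."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return scores[best_index], best_index


# Made-up 2-dimensional "embeddings", for illustration only.
player_embedding = [0.9, 0.1]
action_embeddings = [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]]

scores = sentence_similarity_scores(player_embedding, action_embeddings)
best_score, best_index = rank_similarity_scores(scores)  # the second action wins
```

Normalizing first is a common trick: once every vector has length 1, comparing the input against the whole action list is just a series of dot products.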

Step 4: Build the Robot Behavior 🤖

We need to define the behavior of our robot.

The idea is that our robot has different possible actions, and the action it chooses depends on which one is most similar to the player’s order.

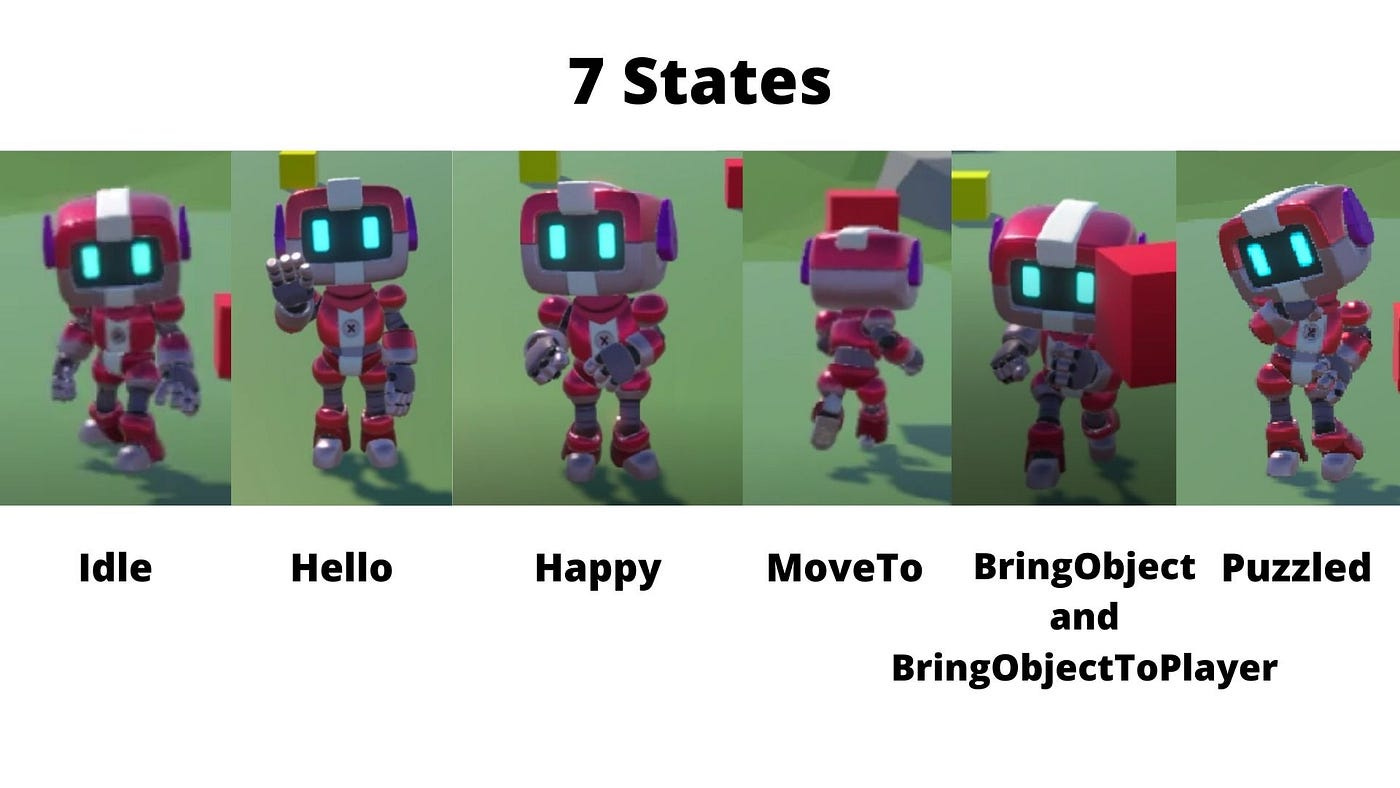

We first need to define the Finite State Machine, a simple AI where each State defines a certain behavior.

Then, we’ll make the utility function that will select the State, and hence the series of actions to perform.

The State Machine 🧠🤖

In a state machine, each state represents a behavior, for instance, moving to a column, saying hello, etc. Based on the state the agent is in, it will perform a series of actions.

In our case, we have 7 states:

The first thing we need to do is create an enum called State that contains each of the possible States:

Because we need to constantly check the state, we define the state machine in the Update() method using a switch statement where each case is a state.

For each state case, we define the behavior of our agent. For instance, in the Hello state, the robot must move towards the player, face them correctly, launch its Hello animation, and then go back to the Idle State.

We have now defined the behavior for each State. The magic here comes from the fact that it’s the language model that decides which State is the closest to the player input. And in the utility function, we set this state.
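Here is a minimal sketch of that pattern in plain Python (not the project's C# code). It only covers three of the states mentioned in this article, the log strings stand in for real movement and animation calls, and each behavior finishes in a single update, which is a simplification. Returning to Idle after the Puzzled state is my assumption.

```python
from enum import Enum, auto


class State(Enum):
    # Only a subset of the robot's states, for illustration.
    IDLE = auto()
    HELLO = auto()
    PUZZLED = auto()


class Robot:
    def __init__(self):
        self.state = State.IDLE
        self.log = []  # stands in for movement and animation calls

    def update(self):
        """Called once per frame, in the spirit of Unity's Update()."""
        if self.state is State.HELLO:
            self.log += ["move to player", "face player", "play hello animation"]
            self.state = State.IDLE
        elif self.state is State.PUZZLED:
            self.log.append("play perplexed animation")
            self.state = State.IDLE  # assumption: go back to Idle afterwards
        # State.IDLE: nothing to do this frame.


robot = Robot()
robot.state = State.HELLO  # in the game, the utility function sets this
robot.update()
```

After this single `update()` call, the robot has logged its three Hello steps and is back in the Idle state, waiting for the next order.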

Let’s define the Utility Function 📈

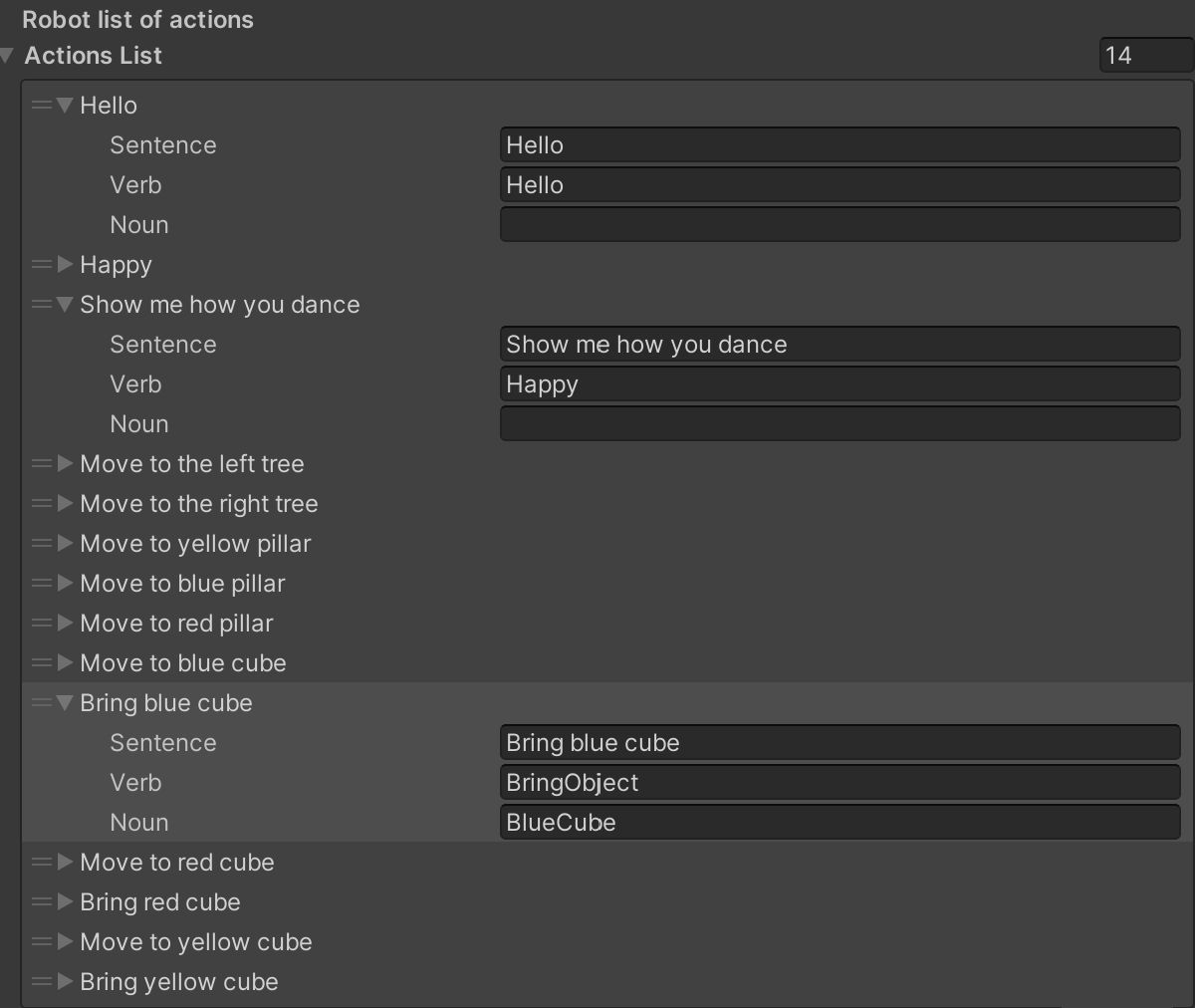

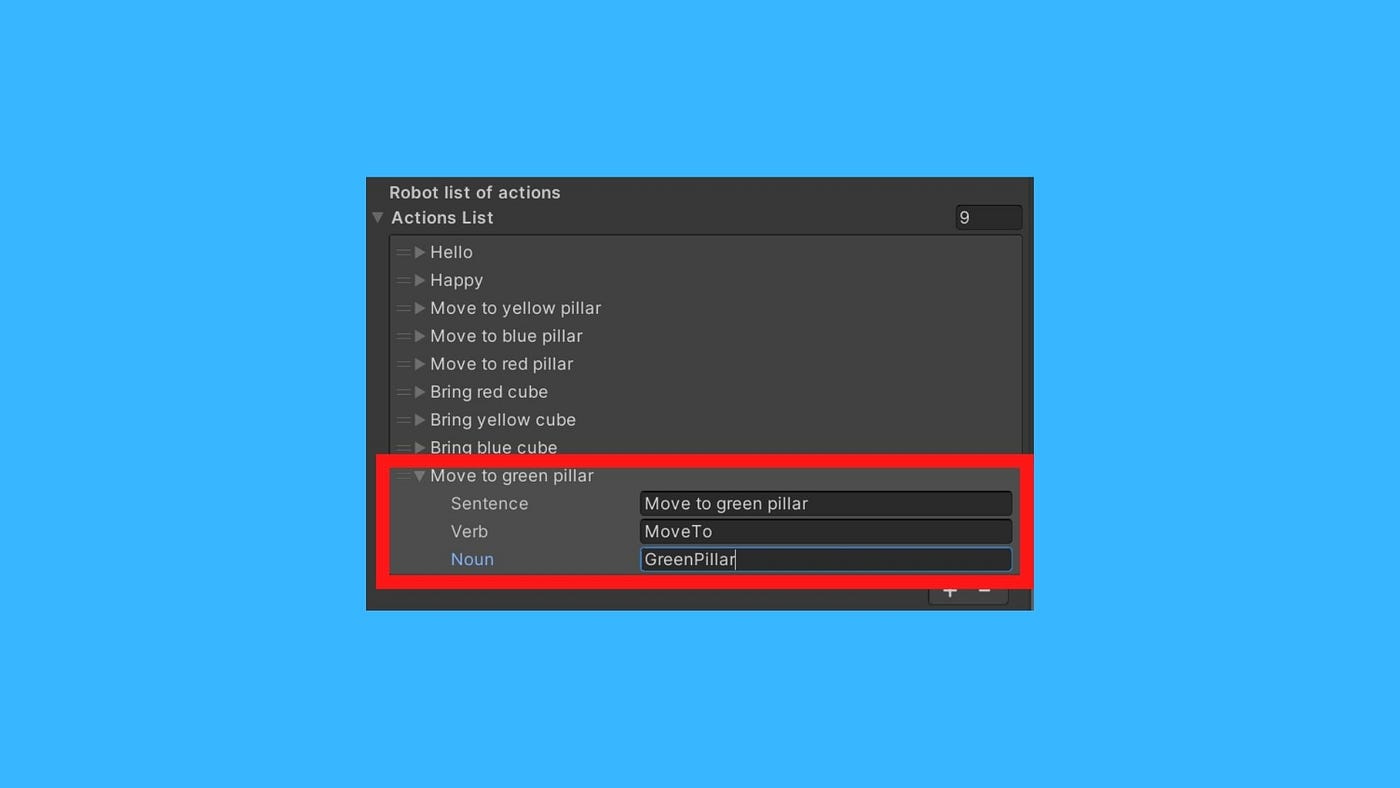

Our action list looks like this:

The sentence is what will be embedded in the AI model.

The verb is the State.

The noun (if any) is the object to interact with (Pillar, Cube, etc.).

This utility function will select the Verb and Noun associated with the sentence having the highest similarity score with the player input text.

But first, to get rid of a lot of strange input text, we need to have a similarity score threshold.

For example, if I say “Look at all the rabbits”, none of our possible actions are relevant. Hence, instead of choosing the action with the highest score, we’ll set the Puzzled State, which plays a perplexed animation.

If the score is higher than the threshold, we’ll get the verb corresponding to a State and the noun (goalObject), if any.

We set the state corresponding to the verb. That will activate the behavior corresponding to it.
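The selection logic can be sketched like this in plain Python (not the project's C# code). The `Action` class and the `utility` signature are hypothetical names for illustration; the 0.2 threshold and the Puzzled state come from the text above, and the "Hello" entry is an assumed example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Action:
    sentence: str               # what gets embedded by the model
    verb: str                   # name of the State to activate
    noun: Optional[str] = None  # object to interact with, if any


def utility(actions, best_score, best_index, threshold=0.2):
    """Return (state_name, goal_object) for the best action, or Puzzled."""
    if best_score <= threshold:
        # The order is too different from every known action.
        return "Puzzled", None
    action = actions[best_index]
    return action.verb, action.noun


# Hypothetical action list, for illustration only.
ACTIONS = [
    Action("Hello", "Hello"),
    Action("Go to the green column", "GoTo", "GreenColumn"),
]

relevant = utility(ACTIONS, best_score=0.63, best_index=1)
irrelevant = utility(ACTIONS, best_score=0.05, best_index=0)
```

Here `relevant` is `("GoTo", "GreenColumn")` and `irrelevant` is `("Puzzled", None)`. The scores passed in are invented; in the game they come from the ranking step.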

And that’s it, now we’re ready to interact with our robot!

Step 5: Let’s interact with our Robot 🤖

In this step, you just need to click on the play button in the editor. And you can prompt some orders and see the results.

Improving the game: let’s add a new action

Let’s take an example:

Copy the YellowPillar game object and move it

Change the name to GreenPillar

Create a new material and set it to green

Place the material on GreenPillar

Now that we’ve placed the new game object, we need to add this possibility to the sentences. Click on Jammo_Player.

In the list of actions click on the plus button and fill in this new action item:

Sentence: Go to the green column

Verb: GoTo

Noun: GreenColumn

And that’s it! It means you can easily iterate and add more actions to your game.

Now that you understand how this project works, don’t hesitate to iterate to make something different.

For instance, with the same codebase, I’m creating this Dungeon Escape game where your goal is to flee from this jail by stealing the key and the gold without getting noticed by the guard.

I’m planning to publish it in the future, so don’t hesitate to follow me on Twitter.

Congratulations! In a few steps, we’ve just built a robot that’s able to perform actions based on your orders. That’s amazing!

What you can do next is dive deeper into the code in Scripts/Final and look at the final scene in Scenes/Final. I commented every part of it, so it should be relatively straightforward.

Also, don’t hesitate to check Unity Sentis Samples, a repository that contains very nice example and template projects.

This tutorial is part of the Making Games with AI Course, a free upcoming course on creating AI-powered games for Unity and Unreal. Don’t forget to sign up 👉 here

If you liked the article, ❤️ it, share it, and follow me. And don’t hesitate to ⭐ the Sharp Transformers GitHub repository, as it helps with discoverability 🤗

Finally, don’t hesitate to join our Discord community to exchange with game developers interested in using AI 👉 https://hf.co/join/discord

See you next time,

Keep Learning, Stay Awesome

Unity Sentis is now in open beta.

No sign-up required: https://discussions.unity.com/t/about-sentis-beta/260899
